Low-code MVP Development for Startups (2026 Guide)

A 2026 guide to low-code MVP development for startups. Learn how to validate ideas faster, cut build costs, and launch without overengineering.

What a Low-code MVP Is and Why Startups Use It

Most startups fail not because they build badly, but because they build the wrong thing. Startup post-mortem surveys, such as the widely cited CB Insights analysis, repeatedly rank lack of market need among the most common reasons for failure.

A low-code MVP exists to prevent exactly that outcome by forcing learning early, before costs and complexity pile up.

It is a validation tool, not a tech shortcut.

- A low-code MVP is designed to test one risky assumption fast

Instead of building a full product, it focuses on validating demand, workflow fit, or willingness to pay through real user behavior, not opinions or surveys.

- It turns guesses into measurable actions

A low-code MVP lets you observe what users actually do, such as completing a task, returning, or paying, which is far more reliable than verbal feedback.

- It is fundamentally different from prototypes or clickable demos

Prototypes show intent. Low-code MVPs reveal friction, confusion, drop-offs, and repeat usage, which are the signals founders need to make hard decisions.

- It intentionally avoids scale, polish, and edge cases

Performance tuning, complex roles, and future-proof architecture are skipped so weak ideas fail early and strong ones earn deeper investment.

- Startups use low-code to reduce early-stage risk and cost

Visual development and integrations shorten build cycles, allowing faster experiments and quicker decisions, following the same validation-first thinking outlined in this no-code MVP guide.

A low-code MVP answers one question clearly: should this idea move forward at all? That clarity is why startups use it before committing serious time, money, and focus.

When Low-code Is the Right (and Wrong) Choice for Your MVP

Low-code is powerful, but only when used for the right job. Many startups misuse it by forcing it into problems it is not meant to solve. The real decision is not low-code versus traditional code. It is learning speed versus long-term optimization.

Choosing correctly saves months of rework.

- Low-code works best when validation speed matters most

If your biggest risk is demand, workflow fit, or willingness to pay, low-code lets you test real usage quickly without committing to heavy engineering or long build cycles.

- Ideal for early-stage startups testing unclear assumptions

Startups with unknown users, fuzzy requirements, or evolving ideas benefit most, because low-code MVPs adapt easily as learning changes direction.

- Low-code fits products with clear workflows and integrations

Apps built around forms, dashboards, automations, and APIs are well suited, making it easier to validate behavior instead of infrastructure choices.

- Traditional development is better when scale is already proven

If you already have traction, strict performance needs, or deep system-level requirements, custom development provides more control and optimization.

- Low-code is not ideal for deeply technical or real-time systems

Products requiring heavy real-time processing, custom hardware interaction, or advanced graphics often exceed what low-code should handle at the MVP stage.

- Validation speed should come before long-term scalability

Premature optimization slows learning. Many teams validate first with low-code, then transition thoughtfully, as outlined in this startup MVP development guide.

Low-code is a decision tool, not a permanent commitment. When used at the right stage, it helps startups learn faster and invest with confidence instead of guessing early.

Before You Build: Validating the Low-code MVP Idea

Most startups do not fail at execution. They fail because they start building before they understand what actually needs to be proven. Validation is not about slowing down. It is about making sure every build step produces learning instead of false confidence.

Before you build anything, you need evidence, not enthusiasm.

- Identify the real problem users experience repeatedly

Go beyond surface complaints and understand how often the problem occurs, how it affects daily work, and what users currently do to cope without your product.

- Separate the problem from your proposed solution

Founders often fall in love with solutions. Validation starts by confirming the problem exists independently of your idea and is painful enough to justify change.

- Map assumptions that could invalidate the entire idea

Write down assumptions about user type, urgency, workflow fit, and willingness to pay. These are risks, not facts, and each one must be tested deliberately.

- Define what evidence would make you confident to proceed

Decide upfront what success looks like, such as repeated usage, completed workflows, or payments, so validation results guide decisions instead of emotions.

- Avoid building until learning goals are explicit

If you cannot clearly state what you are trying to learn from an MVP, building will only add complexity without reducing uncertainty.

- Use low-code MVPs as controlled learning experiments

Low-code allows you to test assumptions through real usage quickly, using the same approach outlined in this guide on validating startup ideas with low-code MVPs.

Validation changes the question from “Can we build this?” to “Should we build this at all?” That shift protects time, capital, and founder focus before real commitment begins.
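One way to make "map assumptions" and "define evidence upfront" concrete is to write each assumption down next to the metric and threshold that would confirm it. The sketch below is only an illustration: the `Assumption` class, the `review` function, the metric names, and every threshold are hypothetical, and your own numbers should come from your market, not from this example.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    """One risky belief plus the evidence that would confirm it."""
    statement: str    # the belief, written as a falsifiable claim
    metric: str       # the observable behavior used as evidence
    threshold: float  # minimum value that counts as "confident to proceed"


def review(assumptions, observed):
    """Return {statement: verdict}: 'supported', 'not supported', or 'untested'."""
    verdicts = {}
    for a in assumptions:
        if a.metric not in observed:
            verdicts[a.statement] = "untested"
        elif observed[a.metric] >= a.threshold:
            verdicts[a.statement] = "supported"
        else:
            verdicts[a.statement] = "not supported"
    return verdicts


# Hypothetical assumptions and thresholds, decided before anything is built.
assumptions = [
    Assumption("Ops managers complete a report without help", "workflow_completion_rate", 0.50),
    Assumption("Users come back within a week", "week_1_return_rate", 0.30),
    Assumption("Users will pay for the report", "paid_conversion_rate", 0.05),
]

# Hypothetical observations from the MVP; payment has not been tested yet.
observed = {"workflow_completion_rate": 0.62, "week_1_return_rate": 0.18}

for statement, verdict in review(assumptions, observed).items():
    print(f"{verdict:>13}: {statement}")
```

Anything that comes back "untested" is a reminder that the MVP has not yet produced evidence for that belief, which is different from the belief being wrong.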

Defining the Right MVP Scope for Startups

Overbuilding is one of the fastest ways to kill learning. Many startups fail not because the idea was bad, but because the MVP tried to do too much too early. A strong MVP scope is intentionally narrow and uncomfortable by design.

Scope is about learning, not completeness.

- An MVP must include only what proves the core assumption

Every feature should exist to test one risky belief, such as whether users complete a task, return again, or pay for solving that specific problem.

- An MVP must exclude polish, edge cases, and future ideas

Design perfection, advanced permissions, scalability concerns, and “nice-to-have” features hide weak signals and slow down validation without improving decision quality.

- Translate assumptions directly into testable features

If you assume users need faster reporting, the MVP feature should deliver one report end to end, not a full analytics dashboard with multiple options.

- Build for one primary user and one main workflow

Supporting multiple user types or workflows early dilutes learning and makes it unclear which behavior validates or invalidates the startup idea.

- Use constraints to force clarity

Limiting time, budget, and feature count forces tough prioritization decisions and reveals what truly matters to users versus what founders only assume matters.

- Use a structured feature selection process

A disciplined approach like the one explained in this guide on how to choose MVP features helps teams avoid emotional or opinion-driven scope decisions.

A well-scoped MVP feels incomplete on purpose. That discomfort is a signal you are prioritizing learning over comfort, which is exactly what early-stage startups need.

Step-by-Step Low-code MVP Development Process

A low-code MVP works only when the process is deliberate. Speed alone does not create learning. This step-by-step approach keeps founders focused on reducing uncertainty at each stage, instead of rushing toward features or polish too early.

Each step below is designed to produce clear evidence.

Problem framing and research

This stage defines whether your MVP solves a real, recurring problem or just an interesting idea.

- Clarify the exact problem in real-world terms

Describe who experiences the problem, how often it occurs, and what happens when it is not solved. Vague pain leads to vague MVPs.

- Study how users solve the problem today

Existing workarounds like spreadsheets, emails, or manual checks reveal what users tolerate and what they are willing to change.

- Identify moments of friction, delay, or cost

Look for steps that waste time, create errors, or slow decisions. These moments are where MVP value usually lives.

- Capture language users naturally use

The words users repeat help shape MVP flows, labels, and validation metrics later.

- Define the learning goal before building anything

If you cannot state what must be proven, the MVP will drift toward building instead of learning.

Feature prioritization for Low-code MVP

This step prevents overbuilding by linking features directly to assumptions.

- List assumptions before listing features

Write down beliefs about usage, frequency, urgency, and willingness to pay. Features should exist only to test these beliefs.

- Choose one core workflow to validate first

Your MVP should deliver one outcome clearly, not multiple partial experiences that confuse validation signals.

- Cut features that do not create measurable behavior

If a feature does not help you observe activation, completion, or repeat usage, it does not belong in the MVP.

- Design features to fail fast if the idea is weak

A good MVP exposes lack of demand quickly instead of hiding it behind extra options or complexity.

- Accept that discomfort is part of good scope

If the MVP feels incomplete, it usually means scope is right.

Platform and architecture decisions

This stage balances learning speed with technical sanity.

- Select the platform based on validation needs

Logic-heavy workflows may suit Bubble, mobile-first tests may fit FlutterFlow, and internal tools often work best with Glide.

- Define only the data structures needed for learning

Focus on entities required to track behavior, not future reporting or scalability assumptions.

- Avoid premature optimization or extensibility

Performance tuning and future-proof architecture slow learning and are unnecessary before validation.

- Limit integrations to what unlocks the core workflow

Each integration adds risk. Include only those that directly support the validation goal.

- Keep architecture simple enough to change quickly

The MVP should be easy to modify, break, or rebuild as learning evolves.

Building workflows and logic

This stage turns assumptions into a usable product.

- Build the happy path first, without exceptions

Focus on the simplest path that delivers value before handling errors or edge cases.

- Make user actions visible and measurable

Every meaningful action should be tracked so behavior becomes evidence, not guesswork.

- Design workflows that guide users naturally

Clear next steps matter more than feature richness during early validation.

- Avoid advanced logic unless it proves the core value

Complexity hides weak signals and delays learning.

- Expect to rebuild parts quickly

MVP workflows are temporary by design and should be easy to change.
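To illustrate what "visible and measurable" can mean in practice, here is a minimal sketch of an event log. It assumes nothing about any particular low-code platform: `EventLog`, its methods, and the action names are hypothetical stand-ins for whatever analytics or database table your chosen tool provides.

```python
import json
import time


class EventLog:
    """Append-only, in-memory log of user actions (a stand-in for a real analytics tool)."""

    def __init__(self):
        self.events = []

    def track(self, user_id, action, timestamp=None, **properties):
        """Record one action; timestamp defaults to the current Unix time."""
        event = {
            "user_id": user_id,
            "action": action,
            "timestamp": time.time() if timestamp is None else timestamp,
            "properties": properties,
        }
        self.events.append(event)
        return event

    def count(self, action):
        """Number of distinct users who performed the action at least once."""
        return len({e["user_id"] for e in self.events if e["action"] == action})

    def dump(self):
        """Serialize the log as JSON lines, one event per line."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.events)


# Hypothetical usage: two sign-ups, one of which reaches the core action.
log = EventLog()
log.track("u1", "signed_up", timestamp=0)
log.track("u1", "report_generated", timestamp=120, report_type="weekly")
log.track("u2", "signed_up", timestamp=300)

print(log.count("signed_up"))         # distinct users who signed up
print(log.count("report_generated"))  # distinct users who reached the core action
```

The point of the sketch is the shape of the data: who did what, and when. If each step of the happy path writes one such record, the gap between sign-ups and core actions becomes something you can count rather than guess.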

Internal testing before launch

This stage protects validation from false negatives.

- Test the MVP end-to-end using realistic scenarios

Simulate real user behavior, not ideal cases, to uncover friction early.

- Validate tracking and metrics accuracy

Broken analytics can make strong ideas look weak or hide real demand.

- Remove internal confusion before exposing users

If your team struggles to use it, users will abandon it immediately.

- Prepare fast iteration workflows before launch

Decide how changes will be made quickly once real feedback starts coming in.

- Set expectations for learning, not perfection

Internal testing ensures the MVP is usable enough to learn from, not flawless.

For a deeper breakdown of this execution approach, refer to this internal guide on the MVP development process.

A strong low-code MVP process is not about speed alone. It is about creating clarity at every step so founders can move forward with confidence instead of assumptions.

Choosing the Right Low-code Platform for Your MVP

Many MVPs fail because the tool was chosen before the problem was clear. Platforms do not validate ideas. Decisions do. The right low-code platform supports your learning goal, not your future architecture or tech preferences.

Tool choice should follow validation needs.

- Choose the platform based on the product type you are testing

Logic-heavy SaaS products often need flexible data models and workflows, while simple internal tools or dashboards benefit from faster, more constrained platforms.

- Match logic-heavy MVPs with platforms built for complexity

If your MVP requires conditional flows, permissions, and complex logic, platforms like Bubble offer better control, as explained in this Bubble MVP app development guide.

- Use workflow-driven platforms for operational validation

MVPs focused on approvals, task tracking, or internal processes often validate faster on simpler platforms where speed matters more than customization.

- Decide based on integration and data requirements

If validation depends on pulling data from APIs, CRMs, or third-party tools, choose a platform that handles integrations reliably without heavy custom work.

- Avoid choosing tools based on hype or future scale

Early MVPs rarely fail due to platform limits. They fail because the problem was wrong or unclear. Optimize for learning speed, not long-term perfection.

The right low-code platform disappears into the background. When chosen correctly, it lets you focus on users, behavior, and decisions instead of fighting tooling choices too early.

Designing for Usability, Not Perfection

Early MVP design fails when founders chase polish instead of clarity. Users do not leave because a product looks unfinished. They leave because they do not understand what to do next. MVP design should remove confusion, not impress.

Usability creates learning. Perfection hides it.

- Design for clear actions, not visual elegance

Every screen should make the next step obvious. If users hesitate or ask questions, the design has already failed its validation purpose.

- Optimize for one primary workflow at a time

MVP UX should guide users through a single core task without distractions, secondary navigation, or unnecessary choices that dilute behavior signals.

- Reuse components to stay consistent and fast

Reusable buttons, forms, and layouts reduce cognitive load for users and make iteration faster when feedback requires changes.

- Avoid early design debt by skipping custom styling

Custom visuals, animations, and branding slow changes and create attachment. MVPs should be easy to modify or discard without emotional cost.

- Design for real usage, not demo scenarios

Think about interruptions, mistakes, and incomplete actions. Usable MVPs handle partial flows better than visually polished but rigid interfaces.

- Web-first MVPs benefit from simpler UX patterns

For browser-based products, proven web patterns reduce friction and speed learning, as outlined in this guide on web MVP development.

Good MVP design feels boring on purpose. When usability is clear, learning becomes visible, and that is what moves startups forward.

Launching the MVP and Collecting Real Feedback

An MVP only becomes valuable once real users touch it. Launching is not about traffic or announcements. It is about putting the product in the hands of the right people and watching what actually happens, not what they say will happen.

Launch is where learning begins.

- Start with a soft launch, not a public release

Share the MVP with a small, relevant user group so feedback stays focused. Broad launches hide weak signals behind noise and vanity metrics.

- Design onboarding to expose friction early

Early onboarding should guide users into the core workflow fast. Where users hesitate, drop off, or ask questions reveals usability and value gaps.

- Observe behavior before asking for opinions

What users click, skip, repeat, or abandon matters more than verbal feedback. Behavior shows whether the problem is real and urgent.

- Balance qualitative insight with quantitative signals

Conversations explain why users act a certain way, while metrics like activation and repeat usage show whether the MVP truly delivers value.

- Compare signals against real-world patterns

Studying outcomes from similar MVP launches helps separate normal early friction from true lack of demand, as seen across these MVP case studies.

A strong MVP launch is quiet, controlled, and intentional. When feedback comes from real usage, decisions become clearer and far less emotional.

Measuring Low-code MVP Success (What Metrics Actually Matter)

Most founders measure the wrong things early. Page views, sign-ups, or likes feel good but do not validate a startup idea. A low-code MVP succeeds only when it produces clear signals that help you decide what to do next.

Metrics exist to reduce doubt, not boost confidence.

- Activation shows whether users reach real value

Activation is the moment users complete the core action your MVP promises. If users sign up but never activate, the problem or workflow is likely weak.

- Usage patterns matter more than raw numbers

How often users return, which actions they repeat, and where they stop tells you far more than total user count in early-stage MVP validation.

- Retention beats vanity metrics every time

A small group of users returning consistently is a stronger signal than hundreds of one-time users. Retention shows real problem fit.

- Time-to-value reveals urgency

If users reach value quickly without guidance, the problem is likely real. Long delays often signal confusion or low priority.

- Decide when data is strong enough to act

When behavior repeats across users and sessions, you have enough evidence to iterate, scale, pivot, or stop with confidence.

Low-code MVP success is not about growth charts. It is about clarity. When the metrics tell a consistent story, decision-making becomes calm and objective.
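The three metrics above can be computed from a plain list of user events. The following is a rough sketch under stated assumptions: the event tuples, the `report_generated` core action, and the definition of retention as "core action on at least two different days" are all hypothetical choices made for illustration, and a real MVP may reasonably define them differently.

```python
from statistics import median

CORE_ACTION = "report_generated"  # hypothetical core action for this MVP

# Hypothetical events: (user_id, action, hours since launch)
events = [
    ("u1", "signed_up", 0), ("u1", CORE_ACTION, 1), ("u1", CORE_ACTION, 49),
    ("u2", "signed_up", 2), ("u2", CORE_ACTION, 5),
    ("u3", "signed_up", 3),
    ("u4", "signed_up", 10), ("u4", CORE_ACTION, 20), ("u4", CORE_ACTION, 100),
]


def mvp_metrics(events, core_action=CORE_ACTION):
    """Compute activation rate, retention rate, and median time-to-value."""
    signup_time = {}
    core_times = {}
    for user, action, t in events:
        if action == "signed_up":
            signup_time[user] = t
        elif action == core_action:
            core_times.setdefault(user, []).append(t)

    # Activated: signed up and completed the core action at least once.
    activated = [u for u in signup_time if u in core_times]
    # Retained (assumed definition): core action on at least two different days.
    retained = [u for u in activated
                if len({int(t // 24) for t in core_times[u]}) >= 2]
    # Time-to-value: hours from signup to the first core action.
    ttv = [min(core_times[u]) - signup_time[u] for u in activated]

    return {
        "activation_rate": len(activated) / len(signup_time) if signup_time else 0.0,
        "retention_rate": len(retained) / len(activated) if activated else 0.0,
        "median_hours_to_value": median(ttv) if ttv else None,
    }


print(mvp_metrics(events))
```

In this made-up data set, three of four sign-ups activate and two of those three return on a later day. With so few users the exact percentages mean little; what matters is whether the same pattern repeats as more users arrive.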

Iterating, Pivoting, or Stopping After MVP Results

The hardest part of MVP development is not building. It is deciding what to do after the results are in. Low-code MVPs exist to create clarity, even when that clarity means stopping.

Every outcome is progress if you act on it.

- Iterate when the core problem is validated but execution is weak

If users want the outcome but struggle with usability, speed, or clarity, refine workflows, onboarding, or messaging instead of changing the idea.

- Pivot when signals show interest but from the wrong angle

When usage appears in an unexpected user group or workflow, adjust the problem framing or audience rather than forcing the original plan.

- Stop when behavior shows low urgency or little repeat usage

If users do not return, pay, or rely on the MVP despite improvements, stopping protects time and capital better than continued iteration.

- Avoid emotional attachment to sunk costs

The purpose of MVP validation is to save resources. Walking away early is a successful outcome when evidence supports it.

- Use results to guide long-term product direction

Strong validation should inform architecture, investment, and roadmap decisions, following the same path outlined in this guide on developing a successful minimum viable product.

Post-MVP decisions define startup maturity. When choices are driven by evidence instead of hope, founders move forward with confidence instead of regret.
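The iterate, pivot, or stop rules above can be written down as a small decision function so the team agrees on them before the results arrive. This is one possible reading of those rules, not a formula: the function name, the four yes/no inputs, and the order in which they are checked are assumptions made for this sketch.

```python
def next_step(demand_signal, from_expected_segment, execution_ok, already_improved):
    """Map MVP signals to 'stop', 'pivot', 'iterate', or 'proceed'.

    demand_signal: users return, pay, or rely on the MVP
    from_expected_segment: usage comes from the intended users and workflow
    execution_ok: usability, speed, and clarity are not blocking users
    already_improved: at least one round of improvements has been shipped
    """
    if not demand_signal:
        # No demand even after improvements means stop; otherwise try one more round.
        return "stop" if already_improved else "iterate"
    if not from_expected_segment:
        # Interest exists, but from an unexpected group or workflow.
        return "pivot"
    if not execution_ok:
        # The problem is validated; the execution is the weak part.
        return "iterate"
    return "proceed"


# Hypothetical scenarios
print(next_step(demand_signal=False, from_expected_segment=True,
                execution_ok=True, already_improved=True))
print(next_step(demand_signal=True, from_expected_segment=False,
                execution_ok=True, already_improved=False))
print(next_step(demand_signal=True, from_expected_segment=True,
                execution_ok=False, already_improved=False))
```

Real decisions involve more nuance than four booleans, but agreeing on the inputs in advance makes it harder to explain away a weak result afterwards.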

Cost Expectations for Low-code MVP Development

Cost confusion often leads founders to overbuild or delay learning. A low-code MVP exists to control spend while testing real demand. Understanding where money actually goes helps you budget for learning, not false certainty.

Early cost clarity prevents expensive mistakes.

- Typical low-code MVPs cost between $20k and $45k

Most startups can validate a core workflow, user behavior, and willingness to pay within this range, without building production-grade systems too early.

- Costs rise only when you choose to scale the product

Expanding features, performance optimization, security hardening, and multi-role access increase budgets after validation, not before it.

- Key cost drivers are scope, integrations, and data complexity

MVP cost is influenced more by workflow depth and external integrations than by screens or UI, which is why scope discipline matters early.

- Low-code avoids early engineering overhead

You skip infrastructure setup, large dev teams, and long build cycles, keeping the focus on validating assumptions instead of managing complexity.

- Traditional custom MVPs cost significantly more upfront

Custom development often starts at $40k–$80k for an MVP, and full versions can reach $120k–$180k before demand is clearly proven.

- Low-code reduces long-term risk, not just initial spend

By validating early, startups avoid sinking large budgets into ideas that lack traction, as explained in this comparison of low-code vs custom MVP development costs.

Low-code MVP costs are not about being cheap. They are about spending just enough to learn the truth before committing real capital to scale.
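As a quick back-of-the-envelope comparison, the ranges quoted in this section can be plugged into a few lines of arithmetic. The dollar ranges come from the text above; the `midpoint` and `capital_at_risk` helpers and the scenario of testing three ideas are hypothetical additions for illustration.

```python
# Budget ranges quoted in this section, in USD.
LOW_CODE_MVP = (20_000, 45_000)
CUSTOM_MVP = (40_000, 80_000)
CUSTOM_FULL = (120_000, 180_000)


def midpoint(rng):
    """Middle of a (low, high) budget range."""
    low, high = rng
    return (low + high) / 2


def capital_at_risk(rng, ideas_tested):
    """Worst-case spend if every tested idea fails validation."""
    return rng[1] * ideas_tested


low_code_mid = midpoint(LOW_CODE_MVP)
custom_mid = midpoint(CUSTOM_MVP)

print(f"Low-code MVP midpoint: ${low_code_mid:,.0f}")
print(f"Custom MVP midpoint:   ${custom_mid:,.0f}")
print(f"Midpoint difference:   ${custom_mid - low_code_mid:,.0f}")

# Hypothetical scenario: a founder tests three ideas and all of them fail.
print(f"Worst case, low-code:  ${capital_at_risk(LOW_CODE_MVP, 3):,.0f}")
print(f"Worst case, custom:    ${capital_at_risk(CUSTOM_MVP, 3):,.0f}")
```

The midpoints are a crude summary of wide ranges, so treat the output as a way to frame the budget conversation rather than as a quote.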

Common Mistakes Startups Make with Low-code MVPs

Low-code MVPs fail for the same reasons traditional MVPs fail. The problem is rarely the tool. It is how founders think about validation, learning, and decision-making. These mistakes are avoidable once you know what to watch for.

Most failure comes from misuse, not limitation.

- Treating the MVP like a final product

Adding polish, edge cases, and full feature sets too early hides weak demand signals and turns a learning tool into an expensive guessing exercise.

- Choosing low-code tools before defining the problem

When platform decisions come first, MVPs are shaped by tool constraints instead of validation needs, leading to irrelevant features and unclear outcomes.

- Over-engineering workflows instead of testing behavior

Complex logic and automation slow iteration and make it harder to understand which actions actually matter for validating the startup idea.

- Ignoring negative signals or low engagement

Founders often explain away weak usage instead of accepting it as data. MVPs exist to surface bad news early, not to confirm beliefs.

- Measuring success with vanity metrics

Sign-ups and page views feel positive but rarely indicate demand. Real validation comes from repeat usage, task completion, or willingness to pay.

- Failing to adapt based on what users actually do

When feedback and behavior are collected but not acted on, the MVP becomes static and loses its purpose as a learning instrument.

These patterns appear repeatedly in early-stage products, which is why this guide on MVP development challenges and mistakes exists.

Low-code MVPs work when founders stay disciplined. When learning comes first and ego stays out of the way, mistakes become insights instead of sunk costs.

What Comes After a Validated Low-code MVP

Validation is not the end. It is the point where uncertainty drops and real product decisions begin. A validated low-code MVP gives you evidence to plan calmly instead of reacting emotionally.

The next steps should be intentional, not rushed.

- Turn validation signals into a clear product roadmap

Use what users actually did, not what they asked for, to decide which workflows to deepen, which features to refine, and which ideas to permanently discard.

- Decide what “scaling” really means for your product

Scaling may mean more users, deeper usage, better reliability, or new segments. Do not assume growth automatically requires rewriting everything.

- Strengthen the core workflow before adding new ones

Double down on the validated use case. Expanding too early weakens focus and often recreates pre-validation uncertainty.

- Evaluate whether low-code still supports your needs

Many products stay on low-code longer than expected. Move to custom development only when performance, control, or complexity becomes a real constraint.

- Plan technical evolution based on proven demand

Architecture, security, and optimization decisions should follow traction, not precede it. This is especially important for SaaS paths, as explained in this SaaS MVP development guide.

A validated MVP earns the right to scale. When decisions are guided by evidence instead of pressure, growth becomes strategic instead of risky.

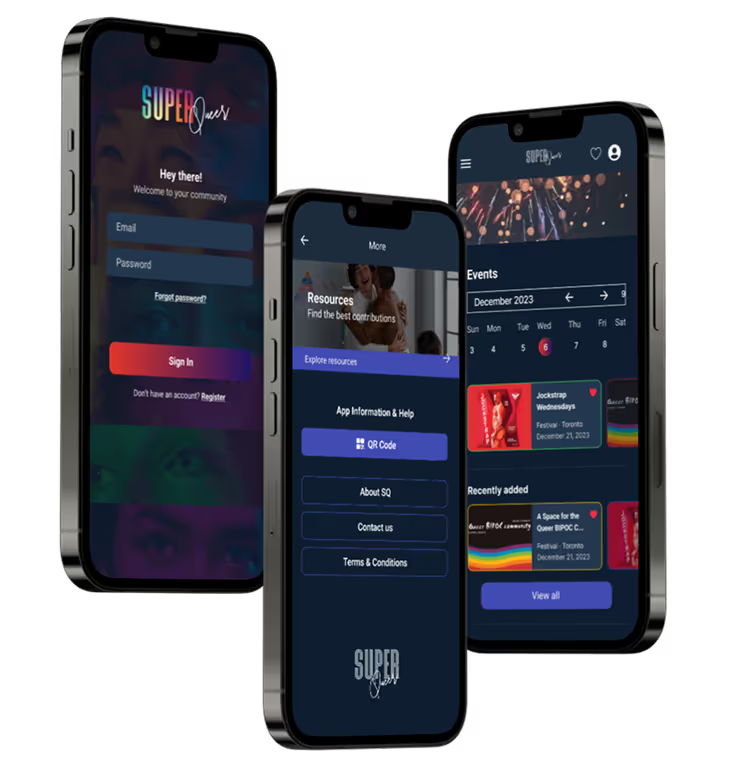

Why Founders Choose LowCode Agency for Low-code MVPs

Founders do not come to LowCode Agency just to “build an MVP.”

They come when they want to avoid wasting months on the wrong product. Our role is to reduce uncertainty early and help you make confident decisions before scale, hiring, or heavy investment.

We operate as your product team, not a software delivery vendor.

- We help you decide what is worth building before anything is built

We start by pressure-testing the problem, user, and assumptions so the MVP exists to answer specific questions, not to satisfy a vague idea or investor narrative.

- We design MVPs to expose truth, not hide it

Every workflow is intentionally scoped to reveal demand, friction, and willingness to pay. We avoid polish and excess features that delay or distort validation signals.

- We bring experience from 350+ shipped products into early decisions

That experience helps us spot patterns founders usually miss, like false traction, misleading engagement, or ideas that feel good but do not survive real usage.

- We choose Bubble, FlutterFlow, Glide, Make, or n8n based on learning goals

Tools are selected based on what you need to validate fastest, whether that is logic-heavy behavior, internal workflows, automation, or simple user adoption.

- We stay involved after launch to interpret results correctly

Many founders misread MVP data. We help you understand whether signals point to iteration, narrowing scope, scaling, or walking away with clarity.

- We protect you from building the wrong thing too well

Our job is not to maximize feature output. It is to minimize regret by stopping weak ideas early and doubling down only when evidence earns it.

If you want an MVP that gives you answers instead of false confidence, the next step is a focused, honest conversation — let’s discuss.

Conclusion

Low-code MVPs are not shortcuts to success. They are tools for learning. When used correctly, they help founders replace assumptions with evidence before time, money, and focus are fully committed.

Low-code reduces risk, but it does not replace thinking. Clear problems, clear scope, and clear metrics still matter more than any platform choice.

In the end, clear decisions beat fast launches. The startups that win are the ones that learn early, decide calmly, and build only what has earned the right to exist.

Created on December 31, 2025. Last updated on December 31, 2025.

FAQs

What is a low-code MVP?

Is low-code suitable for non-technical startup founders?

How small should a startup MVP be?

How long does it take to build a low-code MVP?

How do you know if an MVP is successful?

When should a startup move beyond a low-code MVP?