How to Validate Startup Ideas Using Low-code MVPs

Validate startup ideas faster using low-code MVPs. Learn how founders test demand, reduce risk, and decide whether to build, pivot, or stop early.

Why Startup Idea Validation Matters Before You Build Anything

Most startup ideas fail because they are built on assumptions, not real proof. Teams often rush into design and development without knowing if the problem is real or if users care enough. Validation exists to reduce this risk before time, money, and focus are locked into the wrong direction.

This step is not about slowing you down. It is about making sure every next step is worth taking, especially when resources are limited.

- Most startup ideas fail without early validation

Many products fail after launch because demand was assumed, not tested. Early validation exposes weak interest, unclear value, or poor timing before costs and complexity increase.

- Assumptions feel confident, evidence comes from real behavior

Assumptions are opinions based on belief. Evidence comes from user actions like sign-ups, usage, or drop-offs. Validation replaces guesswork with observable signals.

- Validation is a decision step, not a formality

The goal is not to prove the idea right. It is to decide whether to move forward, pivot, or stop before deeper investment begins.

- Low-code changes speed, risk, and learning

Low-code MVPs let you test real workflows quickly, learn from actual users, and reduce technical risk while keeping validation practical and affordable.

Validating early protects you from building the wrong thing. With low-code, validation becomes a fast learning loop instead of a long, expensive gamble.

What Validation Really Means (And What It Does Not)

Validation is often misunderstood, especially in early startup stages. Many founders believe that positive comments, likes, or friendly feedback mean their idea is validated. In reality, validation is about proving that a real problem exists and that people are willing to change behavior to solve it.

True validation removes emotion from decision-making. It focuses on signals that show demand, urgency, and willingness to engage, not just interest or encouragement.

- Validation is different from opinions and casual feedback

Opinions are easy to give and cost nothing. Validation requires users to take real actions that involve time, effort, or money, showing genuine interest.

- Real validation signals come from user behavior

Strong signals include sign-ups, repeated usage, waitlists, referrals, or payments. These actions prove the problem matters enough to act on.

- People liking the idea is not validation

Users may say an idea sounds good but never use it. Polite encouragement does not predict adoption or long-term demand.

- Validation proves the problem, not feature depth

Early validation focuses on whether the problem is painful and frequent. Feature richness can wait until the problem itself is clearly confirmed.

Good validation gives you clarity, not compliments. It helps you decide what deserves to be built and what should be stopped early.

When a Low-code MVP Is the Right Validation Tool

Not every idea needs a full product to validate, but some ideas cannot be tested with interviews or landing pages alone. When the value depends on real usage, workflows, or repeated actions, a low-code MVP becomes the most reliable validation tool. It lets you test behavior instead of promises.

Low-code MVPs are especially useful when you need to see how users interact with a solution in real conditions, not how they react to a concept slide or signup form.

- Low-code MVPs outperform interviews and landing pages

Interviews capture intent, not action. Landing pages test interest, not usage. A low-code MVP shows how users behave when the solution is real and usable.

- Best suited for workflow-driven and operational ideas

Ideas involving processes, coordination, or daily tasks need real interaction. Low-code MVPs reveal friction points that feedback alone cannot surface.

- Strong fit for early SaaS and internal tools

SaaS products, internal systems, and dashboards benefit from live usage data. Low-code allows fast testing without engineering overhead or long build cycles.

- Ideal for niche platforms and focused use cases

Niche marketplaces, vertical tools, or role-specific apps validate faster when users can actually try them, not just imagine them.

If you are unsure how to structure this stage, this low-code MVP development guide explains how teams approach validation without overbuilding.

Low-code MVPs turn validation into observation, not assumption. You learn faster because users show you the truth through how they use the product.

Pre-MVP Validation Steps You Should Not Skip

Before building anything, you need shared clarity on what you are testing and why. Many MVPs fail because teams rush into tools without aligning on the real problem. Pre-MVP validation creates focus so your MVP answers one meaningful question instead of spreading effort across guesses.

This stage protects you from building fast in the wrong direction.

- Define the exact problem and the specific target user

Clearly identify who has the problem, when it appears, and why it hurts. Vague problems create MVPs that cannot confirm demand or urgency.

- List assumptions that must be proven, not debated

Write down risky beliefs about user behavior, willingness to switch, or frequency of pain. Each assumption should be testable through real actions.

- Talk to real users before writing specs or flows

Early conversations expose how users describe the problem and what they already try. This prevents building features based on internal language instead of reality.

- Identify the single problem worth testing first

Strong MVPs validate one critical risk. Pick the assumption that would kill the idea if proven wrong and test only that.

If you want a structured way to move from problem clarity to execution, this startup MVP development guide explains how teams narrow scope before building.

Strong pre-MVP validation creates focus and discipline. That focus is what makes low-code MVPs fast learning tools instead of rushed experiments.
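One way to make "pick the assumption that would kill the idea" concrete is to keep the assumptions in a small scored list. The sketch below is only an illustration: the `Assumption` fields, the 1 to 5 scales, the scoring heuristic, and the sample statements are all hypothetical, not a method described in this article.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str        # phrased so a real user action can confirm or refute it
    impact_if_wrong: int  # 1 (minor) to 5 (kills the idea); a subjective team estimate
    evidence: int         # 1 (pure guess) to 5 (already observed in real behavior)


def riskiest(assumptions: list[Assumption]) -> Assumption:
    """Return the assumption with the highest impact and the least evidence."""
    # Hypothetical heuristic: high impact combined with low evidence ranks first.
    return max(assumptions, key=lambda a: a.impact_if_wrong * (6 - a.evidence))


# Made-up example backlog, for illustration only.
backlog = [
    Assumption("Coaches will log sessions weekly without reminders", 5, 1),
    Assumption("Users prefer mobile over desktop", 2, 2),
    Assumption("Teams will pay before seeing integrations", 4, 3),
]

first_test = riskiest(backlog)
print(first_test.statement)
```

The scores themselves are guesses. The value is in forcing the team to rank assumptions and commit to testing one before building.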

How to Decide What Your MVP Should (and Should Not) Include

One of the biggest mistakes founders make is treating an MVP like a small version of the final product. An MVP is not about scale, polish, or completeness. It exists to answer one question: does this idea have real demand worth pursuing?

Good MVP scoping is about subtraction. You remove anything that does not help you learn, and keep only what proves or disproves your core assumption.

- MVP scope should focus on validation, not scale

Your MVP should test demand and behavior, not performance or growth. Scalability, edge cases, and advanced features can wait until the problem is proven.

- Cut features that do not prove demand

If a feature does not help confirm user interest, urgency, or willingness to act, it adds noise. Extra features slow learning and hide weak signals.

- Turn risky assumptions into testable MVP features

Each MVP feature should exist to test one assumption. If users engage with it, your assumption gains evidence. If not, you get clarity fast.

- Avoid feature creep at the earliest stage

Feature creep happens when teams try to please everyone. Early MVPs should feel narrow and opinionated, not flexible or complete.

If you struggle with this step, this guide on how to choose MVP features explains how teams separate learning-focused features from distractions.

A focused MVP gives you honest answers quickly. The fewer features you include, the clearer your validation signals become.

Common Low-code MVP Validation Patterns That Work

Different ideas fail for different reasons. Some fail due to no demand, others due to workflow mismatch, and some because users never change behavior. Low-code MVP patterns work when each one is used to test a specific risk clearly and quickly.

Choosing the wrong pattern gives you misleading signals, even if the build is fast.

Landing Page + Workflow MVP

This pattern works when interest alone is not enough and you need to see users complete a meaningful action tied to the core value.

- Tests intent beyond curiosity

Users must complete a real step such as onboarding or submitting data, proving they are willing to engage beyond liking the idea.

- Validates messaging and flow together

You learn whether users understand the value and can successfully move through the first workflow without explanation or hand-holding.

- Reveals early drop-off points

Abandonment shows where the idea breaks down, helping you identify confusion or weak motivation before building deeper functionality.

This pattern is ideal when demand clarity is the biggest unknown.
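Drop-off points are easier to spot when each step of the first workflow is counted. Here is a minimal sketch, assuming you can export how many users reached each step; the step names and counts are invented for illustration.

```python
def funnel_dropoff(step_counts: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Return the share of users lost between each pair of consecutive steps."""
    losses = []
    for (prev_name, prev_n), (name, n) in zip(step_counts, step_counts[1:]):
        # Guard against an empty previous step to avoid dividing by zero.
        lost = 0.0 if prev_n == 0 else 1 - n / prev_n
        losses.append((f"{prev_name} -> {name}", round(lost, 2)))
    return losses


# Hypothetical counts for a landing page plus one workflow.
steps = [
    ("visited", 400),
    ("signed_up", 120),
    ("onboarded", 60),
    ("submitted_data", 45),
]

dropoffs = funnel_dropoff(steps)
worst = max(dropoffs, key=lambda pair: pair[1])
print(worst)
```

The transition with the largest loss is where confusion or weak motivation is most likely, so it is the first place to look before adding functionality.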

Concierge or Manual MVP

Concierge MVPs are useful when automation risk is high but the value promise is clear. You deliver results manually while observing real usage.

- Validates willingness to use before automation

Users interact with a simple interface while you perform the work manually, proving demand without committing to complex systems.

- Surfaces real expectations and edge cases

Manual delivery reveals what users truly care about, what they ignore, and what creates friction during real interactions.

- Prevents building unnecessary automation

You learn which steps must be automated later and which can remain simple or removed entirely.

This pattern works best when operational complexity is uncertain.

Wizard of Oz MVP

Wizard of Oz MVPs test perceived value and trust by making the product feel complete while keeping backend logic intentionally lightweight.

- Tests user expectations without full backend investment

Users believe the system is automated while processes run manually, helping validate perceived intelligence or sophistication.

- Reveals trust and credibility gaps early

You learn whether users trust the product enough to rely on it, share data, or repeat usage.

- Reduces technical risk during validation

Complex logic stays manual until user behavior confirms it is worth automating.

This pattern fits ideas where experience matters more than implementation.

Internal Tool MVPs

Internal MVPs are ideal when the product targets operational workflows, coordination, or efficiency inside teams.

- Validates real workflow fit inside daily operations

Teams use the MVP during actual work, revealing whether it saves time or adds friction.

- Exposes adoption resistance early

You observe shortcuts, workarounds, and avoidance behaviors that indicate poor fit or unclear value.

- Helps define success metrics clearly

Internal usage makes it easier to measure time saved, errors reduced, or clarity improved.

If you want help choosing the right pattern for your idea, this no-code MVP guide explains how teams match validation methods to risk types.

Strong validation patterns give you signal, not noise. The right pattern helps you learn faster and avoid building confidence on false positives.

Choosing the Right Low-code Platform for Validation

The platform you choose during validation directly affects what you learn and how fast you learn it. Many founders pick tools based on hype, future scale, or popular opinions. For validation, the right choice depends only on the risk you are testing right now.

Your goal is not to build the final system. Your goal is to expose truth early.

Bubble for Logic-Heavy MVP Validation

Bubble is best when validation depends on complex logic, workflows, or data relationships that must behave realistically for users to trust the product.

- Best for testing complex workflows and rules

Bubble allows realistic permissions, conditions, and multi-step logic, making it ideal when validation depends on whether the system actually works as expected.

- Helps validate behavior, not just interest

Logic-heavy MVPs need real interactions. This Bubble MVP app development guide explains how teams validate behavior before worrying about scale.

Bubble is the right choice when fake flows would give false validation signals.

FlutterFlow for Mobile-First Validation

FlutterFlow works well when the core risk is mobile usability, speed, or repeated usage in real-world environments.

- Ideal for mobile-first user behavior testing

FlutterFlow helps validate whether users will open, use, and return to a mobile app in daily situations, not just approve the idea conceptually.

- Useful for consumer-facing or field-use MVPs

Apps involving notifications, on-the-go actions, or simple flows benefit from early mobile validation before investing in deeper backend complexity.

FlutterFlow is about validating mobile behavior, not backend sophistication.

Glide for Internal and Workflow MVPs

Glide is strongest when validating internal tools, operational workflows, or adoption inside real teams.

- Best for testing internal workflows quickly

Glide MVPs validate whether teams actually use the tool during daily work, revealing friction, resistance, or immediate value clearly.

- Exposes adoption risk early

Internal validation shows whether a tool saves time or adds steps, which is impossible to learn from demos or stakeholder approval alone.

Glide is ideal when workflow fit matters more than customization.

Webflow or Simple Web MVPs for Demand Testing

Sometimes the fastest validation does not require an app at all. A focused web MVP is often enough to test clarity and demand.

- Best for testing messaging and core value

Simple web MVPs validate whether users understand the problem and are willing to act without distractions from features or technology.

- Reduces build time and learning cost

When demand is the main unknown, a lightweight approach works best. This web MVP development guide explains when simplicity wins.

Web MVPs help you learn before committing to product complexity.

Choosing the right platform keeps validation honest. When tools match validation goals, you learn faster, avoid false confidence, and make better build decisions.

What Metrics Actually Validate a Startup Idea

Metrics only matter when they help you make a hard decision. Many founders track growth-style numbers that look positive but hide weak demand. Validation metrics exist to confirm real behavior change, not surface-level interest.

The right metrics tell you whether to continue, pivot, or stop.

- Activation matters more than raw sign-ups

Sign-ups only show curiosity. Activation shows users reached the core value moment, completed a meaningful action, and experienced the problem being solved in practice.

- Behavior is stronger than stated interest

Feedback can be polite or misleading. Actions like completing workflows, submitting real data, or using the product without reminders reveal genuine intent and relevance.

- Repeat usage signals problem frequency

When users return on their own, it proves the problem occurs regularly. One-time use usually means the issue is occasional or not painful enough.

- Willingness to pay is the clearest validation signal

Payments, deposits, or paid pilots show urgency. Even small amounts prove users value the solution enough to exchange money, not just time.

- Negative data can still mean validation success

Fast rejection, churn, or drop-offs save months of effort. Learning an idea fails early prevents deeper investment and is still a successful validation outcome.

Strong validation metrics replace hope with evidence. When metrics are chosen correctly, they give you confidence to move forward or stop without second-guessing.
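The metrics above can be computed from a simple event export. This sketch assumes rows of `(user, event, day)` and treats one event name, `core_action`, as the activation moment. Both the event names and the "two distinct days" rule for repeat usage are illustrative assumptions, not definitions from this article.

```python
from collections import defaultdict


def validation_metrics(
    signups: set[str], events: list[tuple[str, str, int]]
) -> dict[str, float]:
    """Compute activation, repeat-usage, and paid rates from (user, event, day) rows."""
    active_days = defaultdict(set)  # user -> days on which they did the core action
    payers = set()
    for user, event, day in events:
        if user not in signups:
            continue  # ignore events from users outside the test cohort
        if event == "core_action":
            active_days[user].add(day)
        elif event == "payment":
            payers.add(user)

    activated = set(active_days)
    # Assumption: "repeat usage" means the core action on at least two distinct days.
    repeaters = {u for u, days in active_days.items() if len(days) >= 2}
    n = len(signups)
    return {
        "activation_rate": len(activated) / n if n else 0.0,
        "repeat_rate": len(repeaters) / len(activated) if activated else 0.0,
        "paid_rate": len(payers) / n if n else 0.0,
    }


# Hypothetical cohort of four sign-ups.
signups = {"a", "b", "c", "d"}
events = [
    ("a", "core_action", 1),
    ("a", "core_action", 3),
    ("a", "payment", 3),
    ("b", "core_action", 2),
]

metrics = validation_metrics(signups, events)
print(metrics)
```

Note that four sign-ups would look fine on a dashboard, while only two users activated, one returned, and one paid. That gap is the difference between curiosity and demand.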

How Long Validation Should Take (and When to Stop)

When you validate startup ideas, speed matters, but discipline matters more. Low-code MVP validation is designed to shorten learning cycles, not to drag decisions out. Without timelines and stop rules, teams either quit too early or keep validating forever without clarity.

Good validation has a defined window and clear decision criteria.

- Low-code MVP validation usually takes weeks, not months

Most teams can validate an idea within two to six weeks using a low-code MVP, which is enough time to observe activation, behavior patterns, and real usage signals.

- Set clear success and failure thresholds before you start

Define metrics like activation rates, repeat usage, or willingness to pay in advance so validation leads to objective decisions, not emotional debates.

- Iterate when validation signals are mixed but promising

If users engage but struggle, improve flows, messaging, or onboarding. Iteration makes sense when the problem is validated but execution needs refinement.

- Pivot when the problem is validated but the solution is not

If validation confirms the pain but users avoid your approach, pivot the solution while keeping the validated problem and target user intact.

- Stop when validation clearly disproves the startup idea

When users do not activate, return, or pay despite honest attempts, stopping early is a successful validation outcome.

For a structured view of how teams run these cycles, this MVP development process explains how low-code MVP validation fits into real product decisions.

Validation should end with clarity. When you validate startup ideas correctly, you know whether to iterate, pivot, or stop without second-guessing.
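Writing the thresholds down as code before the test starts makes the decision harder to argue with afterwards. The sketch below is one possible encoding of the iterate, pivot, and stop rules described in this section. The threshold values, the metric names, and the `problem_confirmed` flag (a judgment you bring from interviews and observation) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    activation: float  # minimum share of sign-ups reaching the core action
    repeat: float      # minimum share of activated users who return
    paid: float        # minimum share of sign-ups who pay or commit money


def decide(metrics: dict[str, float], t: Thresholds, problem_confirmed: bool) -> str:
    """Map observed metrics to 'build', 'iterate', 'pivot', or 'stop'."""
    passed = [
        metrics["activation_rate"] >= t.activation,
        metrics["repeat_rate"] >= t.repeat,
        metrics["paid_rate"] >= t.paid,
    ]
    if all(passed):
        return "build"
    if any(passed):
        return "iterate"  # mixed but promising signals
    # No threshold met: keep the problem only if it was independently confirmed.
    return "pivot" if problem_confirmed else "stop"


# Hypothetical thresholds, agreed before the validation window opens.
limits = Thresholds(activation=0.4, repeat=0.3, paid=0.1)

print(decide({"activation_rate": 0.5, "repeat_rate": 0.1, "paid_rate": 0.0}, limits, True))
```

The exact cut-offs matter less than fixing them in advance, so the result of the window is read against a rule rather than against how the team feels that week.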

Cost of Validating Ideas with Low-code MVPs

Cost directly affects how honestly founders validate ideas. When validation is expensive, teams avoid testing or commit too early. Low-code MVPs lower the cost of learning, while traditional development raises the stakes before demand is proven.

The difference is not just price. It is risk.

Low-code MVP Validation Costs

Low-code MVPs are designed to minimize upfront commitment while maximizing learning speed. You pay for clarity first, not scale.

- Typical low-code MVP validation ranges from $20k to $45k

Most validation-focused low-code MVPs fall in this range, depending on workflow complexity, integrations, and how realistic the product needs to feel for users.

- Costs increase only after validation succeeds

You invest more only when demand is proven. Scaling to a full version happens intentionally, based on real signals, not assumptions.

- Lower cost enables multiple validation cycles

Affordable validation allows iteration, pivots, or restarts without draining capital, making it easier to find a real opportunity before committing long term.

Traditional MVP and Custom Development Costs

Traditional development treats validation like a production build, increasing cost and risk before learning is complete.

- Custom MVP development usually starts at $40k to $80k

Even basic MVPs require architecture, engineering setup, and polish, pushing costs higher before demand or behavior is confirmed.

- Full versions often reach $120k to $180k or more

Scaling a traditionally built product multiplies cost early, forcing founders to commit heavily before knowing if the idea truly works.

- High upfront cost increases emotional and financial risk

When more money is spent early, teams push forward despite weak signals, turning validation into justification instead of learning.

For a detailed breakdown, this comparison of MVP development cost: low-code vs custom explains how teams evaluate validation spend realistically.

Lower-cost validation leads to better decisions. When learning is affordable, founders stay flexible and avoid betting big on unproven ideas.
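A quick calculation shows what the cost ranges in this section mean for the number of attempts you can afford. The sketch uses the $20k to $45k and $40k to $80k ranges quoted above. The $120k budget is a hypothetical figure, and treating every cycle as a full rebuild at the quoted price is a simplification.

```python
def cycles_within(budget: int, cost_low: int, cost_high: int) -> tuple[int, int]:
    """Return (fewest, most) full cycles a budget covers for a per-cycle cost range."""
    return budget // cost_high, budget // cost_low


BUDGET = 120_000  # hypothetical validation budget in dollars

low_code = cycles_within(BUDGET, 20_000, 45_000)  # low-code range from this section
custom = cycles_within(BUDGET, 40_000, 80_000)    # custom MVP range from this section

print(f"Low-code: {low_code[0]} to {low_code[1]} cycles")
print(f"Custom:   {custom[0]} to {custom[1]} cycles")
```

Under these assumptions the same budget covers two to six low-code cycles but only one to three custom builds, which is the practical meaning of "lower cost enables multiple validation cycles".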

Real-World Examples of MVP-Led Validation

Many successful products did not start as full platforms. They started narrow, focused on one risk, and used MVPs to test demand before committing further. These examples show how MVP-led validation creates clarity early and prevents expensive mistakes.

The goal was not scale. The goal was learning.

MVPs Built Only to Test Demand

Some MVPs exist only to answer one question: will anyone actually use this?

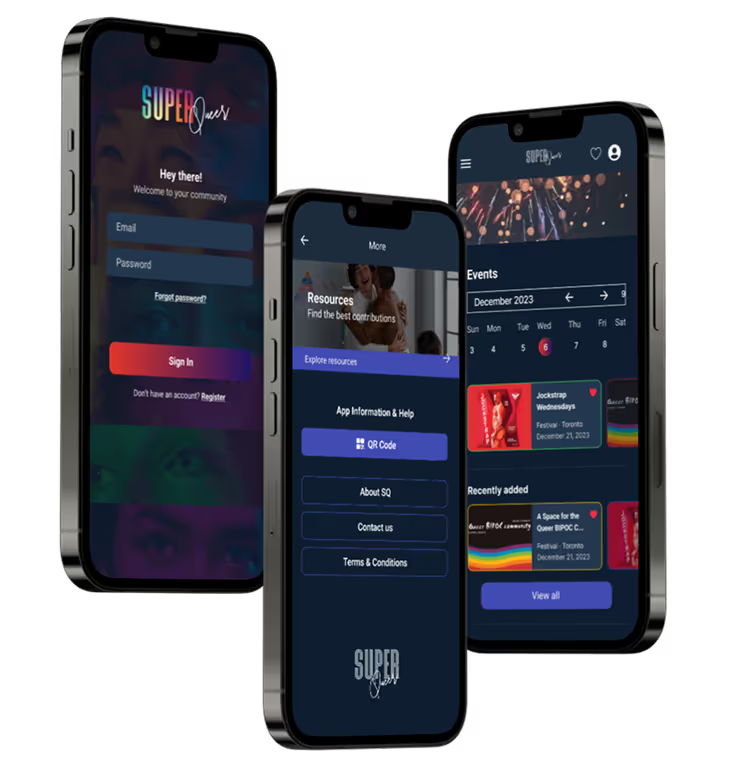

- SuperQueer started as a focused community MVP

The MVP validated whether users would actively engage with events and shared resources. Early engagement confirmed demand before expanding into a larger global platform.

- RentFund validated willingness to adopt a new payment model

The MVP focused only on rent verification and rewards. Early usage proved landlords and tenants would change behavior, justifying further product investment.

These MVPs proved demand before adding features.

What Founders Learned from Early Signals

Early signals often challenge assumptions and reshape direction.

- BarEssay validated problem urgency, not feature depth

Early users engaged deeply with feedback features, proving exam preparation pain was real. This signal mattered more than interface polish or secondary tools.

- CareerNerds replaced spreadsheets before scaling workflows

The MVP showed coaches actively abandoning manual tools. This confirmed workflow pain and justified expanding automation and role-based features later.

Learning came from behavior, not opinions.

Why Many Successful Products Started Narrow

Starting narrow reduces risk and sharpens signals.

- Internal tool MVPs like GL Hunt focused on one workflow

By validating scheduling and documentation first, the team confirmed adoption inside real operations before expanding dashboards and integrations.

- Known.dev validated customer self-service early

The MVP tested whether customers would track shipments independently. Reduced support requests proved value before deeper automation investment.

Narrow scope made validation clear and honest.

If you want more structured examples, these MVP case studies show how teams validated ideas before scaling. For SaaS-specific paths, this SaaS MVP development guide explains how early validation shapes long-term product direction.

Most strong products begin small on purpose. MVP-led validation helps founders earn confidence through evidence, not assumptions.

Common Mistakes That Break MVP Validation

Most failed MVPs are not technical failures. They fail because validation was approached with the wrong mindset. Teams often move fast, but in the wrong direction, collecting noise instead of clear signals. These mistakes give false confidence and delay hard decisions.

Avoiding them is as important as building the MVP itself.

- Building too much too early

Adding extra features, edge cases, or polish hides weak demand signals. Overbuilt MVPs make it harder to see what users actually value and why.

- Ignoring negative signals and weak behavior

Founders often explain away drop-offs, low activation, or churn. Negative signals are still valid data and usually point to deeper problems worth addressing early.

- Validating features instead of the core problem

Testing whether users like features misses the point. Validation should prove the problem is real, painful, and frequent enough to justify any solution.

- Confusing engagement with real demand

Clicks, likes, or short-term usage feel positive but may not indicate need. Demand shows up through repeat use, behavior change, or willingness to pay.

If you want a deeper breakdown of these patterns, this guide on MVP development challenges and mistakes explains why many validation efforts fail despite good intentions.

Good validation feels uncomfortable because it challenges assumptions. Avoiding these mistakes helps you learn faster and make decisions with clarity instead of hope.

What to Do After Validation Succeeds (or Fails)

Validation is not the finish line. It is a decision point. What matters most is how you act on the signals you receive. Strong founders treat validation results as progress, even when the answer is no, because clarity saves time and capital.

The mistake is not failure. The mistake is ignoring what validation tells you.

- Invest in full product development when demand is clearly proven

When users activate, return, and show willingness to pay, it is a signal to invest deeper, improve reliability, and plan for scale with confidence.

- Pivot when the problem is real but the direction is wrong

If users confirm the pain but avoid your solution, pivot the audience, workflow, or positioning while keeping the validated problem intact.

- Walk away when core assumptions are clearly disproven

When repeated tests show low activation, weak retention, or no urgency, stopping early is a smart outcome that prevents long-term waste.

- Treat validation as progress, not success or failure

Validation gives you answers. A clear "no" is just as valuable as a "yes" because it protects focus and frees you to pursue better opportunities.

If you want to move from validation into execution with structure, this guide on how to develop a successful minimum viable product explains how teams build with confidence after learning.

Validation succeeds when it removes doubt. Whether you build, pivot, or stop, clear decisions mean you are moving forward, not backward.

Why Founders Choose LowCode Agency to Build MVPs

Founders work with LowCode Agency when they want clarity before commitment.

At LowCode Agency, we are a strategic product team that designs, builds, and evolves MVPs meant to validate ideas, not impress prematurely. We focus on learning fast, reducing risk, and helping you make the right next decision.

We focus on learning, not hype.

- We operate as a strategic product team, not a delivery shop

We help you define the problem, risky assumptions, validation goals, and success criteria before building, so your MVP answers real questions instead of showcasing features.

- We build MVPs using a validation-first low-code stack

We use Bubble, FlutterFlow, Glide, and AI automation tools like Make and n8n to test real workflows, logic, and user behavior without overengineering early.

- We have shipped 350+ products across real business environments

Our experience spans SaaS, internal tools, marketplaces, and automation systems, giving us pattern recognition on what actually validates demand versus what creates false confidence.

- We reduce risk compared to traditional agencies

Traditional agencies optimize for scope and delivery. We optimize for learning speed, lower upfront cost, and clear signals before you commit to scale or long-term architecture.

- We support decisions after validation, not just builds

When validation succeeds, we help evolve the product. When it fails, we help you pivot or stop with clarity, protecting capital and founder focus.

If you are deciding whether your idea is worth building, the right next step is a conversation. Let’s discuss what your MVP should validate before you invest further.

Conclusion

Validation does not limit creativity. It protects it. By validating early, you reduce risk while giving yourself space to explore ideas that actually matter to users, not just sound good internally.

Low-code MVPs are not built to impress investors or look polished. They exist to help you learn fast, test assumptions honestly, and make decisions before costs and complexity grow.

Clear signals always beat big launches. When you focus on learning first, you build products with confidence, direction, and far fewer regrets.

Created on December 31, 2025. Last updated on December 31, 2025.

FAQs

Can you validate a startup idea without building a full product?

How small should a low-code MVP be for validation?

Is low-code MVP validation reliable for SaaS ideas?

How long does it take to validate a startup idea using a low-code MVP?

What metrics matter most when validating an MVP?

What should you do if your low-code MVP fails validation?
